An easy-to-use & supercharged open-source AI metadata tracker

Aim logs all your AI metadata (experiments, prompts, etc.), provides a UI to compare and observe it, and offers an SDK to query it programmatically.

Aim connects and integrates with your favorite tools

The standard Aim package comes with all integrations. If you'd like to customize an integration, create a new integration package and share it with others.

Get started in under a minute, in any environment

```shell
pip install aim
```

```python
import math

import aim

# Initialize a new run
run = aim.Run()

# Log hyperparameters
run["hparams"] = {
    "learning_rate": 0.001,
    "batch_size": 32,
}

# Log metrics
for step in range(100):
    run.track(math.sin(step), name='sine')
    run.track(math.cos(step), name='cosine')
```

```shell
# Launch the Aim UI to explore the logged run
aim up
```

Now check out our GitHub repo or documentation to learn more.
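To make the quick start's data model concrete: a run carries run-level metadata (here `hparams`) plus named metric sequences of (step, value) pairs. The sketch below is a hypothetical in-memory toy. `ToyRun` is an illustrative name, not Aim's implementation or API, and it assumes that when `step` is omitted, the step continues from the last value recorded for that metric.

```python
import math
from collections import defaultdict


class ToyRun:
    """Hypothetical in-memory stand-in for a run; NOT Aim's implementation."""

    def __init__(self):
        self.params = {}                    # run-level metadata, e.g. hparams
        self.sequences = defaultdict(list)  # metric name -> [(step, value), ...]

    def __setitem__(self, key, value):
        self.params[key] = value

    def __getitem__(self, key):
        return self.params[key]

    def track(self, value, name, step=None):
        seq = self.sequences[name]
        if step is None:
            # Assumption: an omitted step continues from the last recorded one
            step = seq[-1][0] + 1 if seq else 0
        seq.append((step, value))


run = ToyRun()
run["hparams"] = {"learning_rate": 0.001, "batch_size": 32}
for step in range(100):
    run.track(math.sin(step), name="sine", step=step)  # explicit step
    run.track(math.cos(step), name="cosine")           # step inferred

print(len(run.sequences["sine"]))      # 100
print(run.sequences["cosine"][-1][0])  # 99
```

Keeping each metric as its own sequence is what lets `sine` and `cosine` be plotted and compared independently.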

Why use Aim?

Aim is an open-source, self-hosted AI metadata tracking tool designed to handle hundreds of thousands of tracked metadata sequences.

The two most common AI metadata applications are experiment tracking and prompt engineering.

Aim provides a performant and beautiful UI for exploring and comparing training runs and prompt sessions.

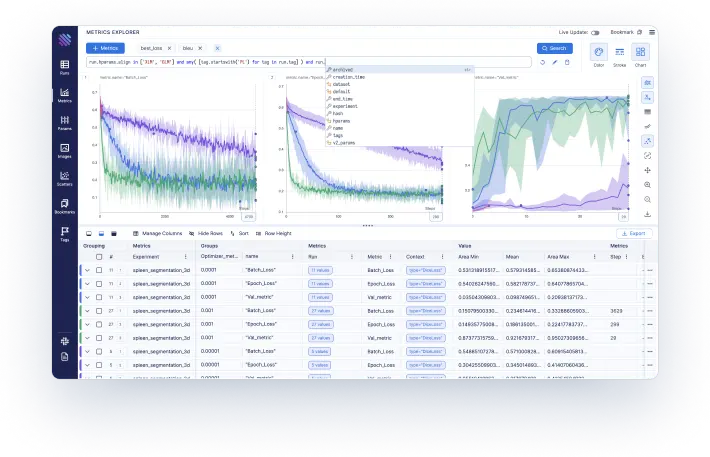

Compare runs easily to build models faster

- Group and aggregate 100s of metrics

- Analyze and learn correlations

- Query runs with an easy Pythonic search
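The filtering, grouping, and correlation ideas above can be illustrated without Aim itself. The sketch below is a hypothetical pure-Python stand-in: `RunRecord`, `select`, `group_final`, and `pearson` are illustrative names, not part of the Aim SDK, and the loss values are made up. In Aim, these operations are performed through its UI and search rather than hand-written helpers.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class RunRecord:
    """Hypothetical summary of one tracked run (not Aim's data model)."""
    name: str
    hparams: dict
    metrics: dict = field(default_factory=dict)  # metric name -> list of values


def select(runs, predicate):
    """Keep the runs for which a plain Python predicate returns True."""
    return [r for r in runs if predicate(r)]


def group_final(runs, key, metric):
    """Group runs by one hparam and average the last value of `metric` per group."""
    groups = {}
    for r in runs:
        groups.setdefault(r.hparams[key], []).append(r.metrics[metric][-1])
    return {k: mean(v) for k, v in groups.items()}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


# Made-up values, for illustration only
runs = [
    RunRecord("a", {"learning_rate": 0.001, "batch_size": 32}, {"loss": [0.9, 0.5, 0.3]}),
    RunRecord("b", {"learning_rate": 0.01, "batch_size": 32}, {"loss": [0.8, 0.4, 0.2]}),
    RunRecord("c", {"learning_rate": 0.001, "batch_size": 64}, {"loss": [1.0, 0.6, 0.4]}),
    RunRecord("d", {"learning_rate": 0.1, "batch_size": 64}, {"loss": [1.2, 0.9, 0.7]}),
]

fast = select(runs, lambda r: r.hparams["learning_rate"] < 0.05)
print([r.name for r in fast])  # ['a', 'b', 'c']

by_batch = group_final(runs, "batch_size", "loss")
print({k: round(v, 2) for k, v in by_batch.items()})  # {32: 0.25, 64: 0.55}

# Correlation between learning rate and final loss across all runs
r_lr_loss = pearson(
    [r.hparams["learning_rate"] for r in runs],
    [r.metrics["loss"][-1] for r in runs],
)
print(round(r_lr_loss, 2))
```

The predicate is ordinary Python, which is the sense in which a Pythonic search reads naturally; a real tracker would evaluate such expressions against its stored runs rather than an in-memory list.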

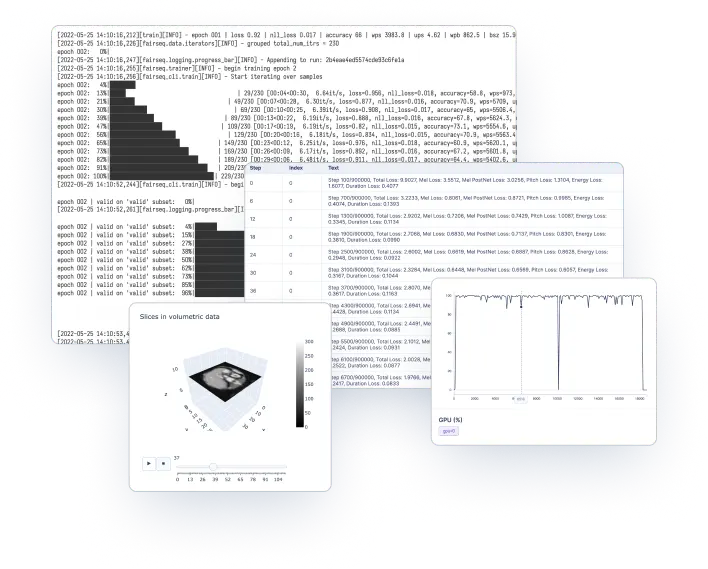

Deep dive into details of each run for easy debugging

- Explore hparams, metrics, images, distributions, audio, text, ...

- Track Plotly and Matplotlib plots

- Analyze system resource usage

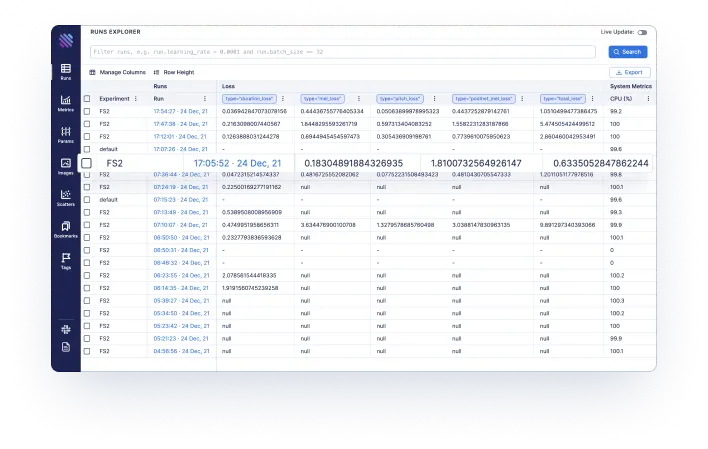

Have all relevant information centralized for easy governance

- Centralized dashboard to view all your runs

- Use SDK to query/access tracked runs

- You own your data: Aim is open source and self-hosted.

Demos

Play with Aim before installing it! Check out our demos to see its full functionality.

Machine translation

Training logs of a neural machine translation model (from the WMT'19 competition).

lightweight-GAN

Training logs of the 'lightweight' GAN proposed at ICLR 2021.

FastSpeech 2

Training logs of Microsoft's "FastSpeech 2: Fast and High-Quality End-to-End Text to Speech".

Simple MNIST

Simple MNIST training logs.
