Hey team, Aim 3.6, featuring the MLflow logs converter, chart export, and PyTorch Ignite & Activeloop Hub integrations, is now available!

We are on a mission to democratize MLOps tools. Thanks to the awesome Aim community for the help and contributions.

Here is what’s new since Aim 3.5:

- Export chart as image
- MLflow to Aim logs converter
- PyTorch Ignite integration
- Activeloop Hub integration
- Wildcard support for `aim runs` subcommands

Special thanks to krstp, ptaejoon, farizrahman4u from Activeloop, vfdev-5 from PyTorch Ignite, hughperkins, Ssamdav and mahnerak for feedback, reviews and help.

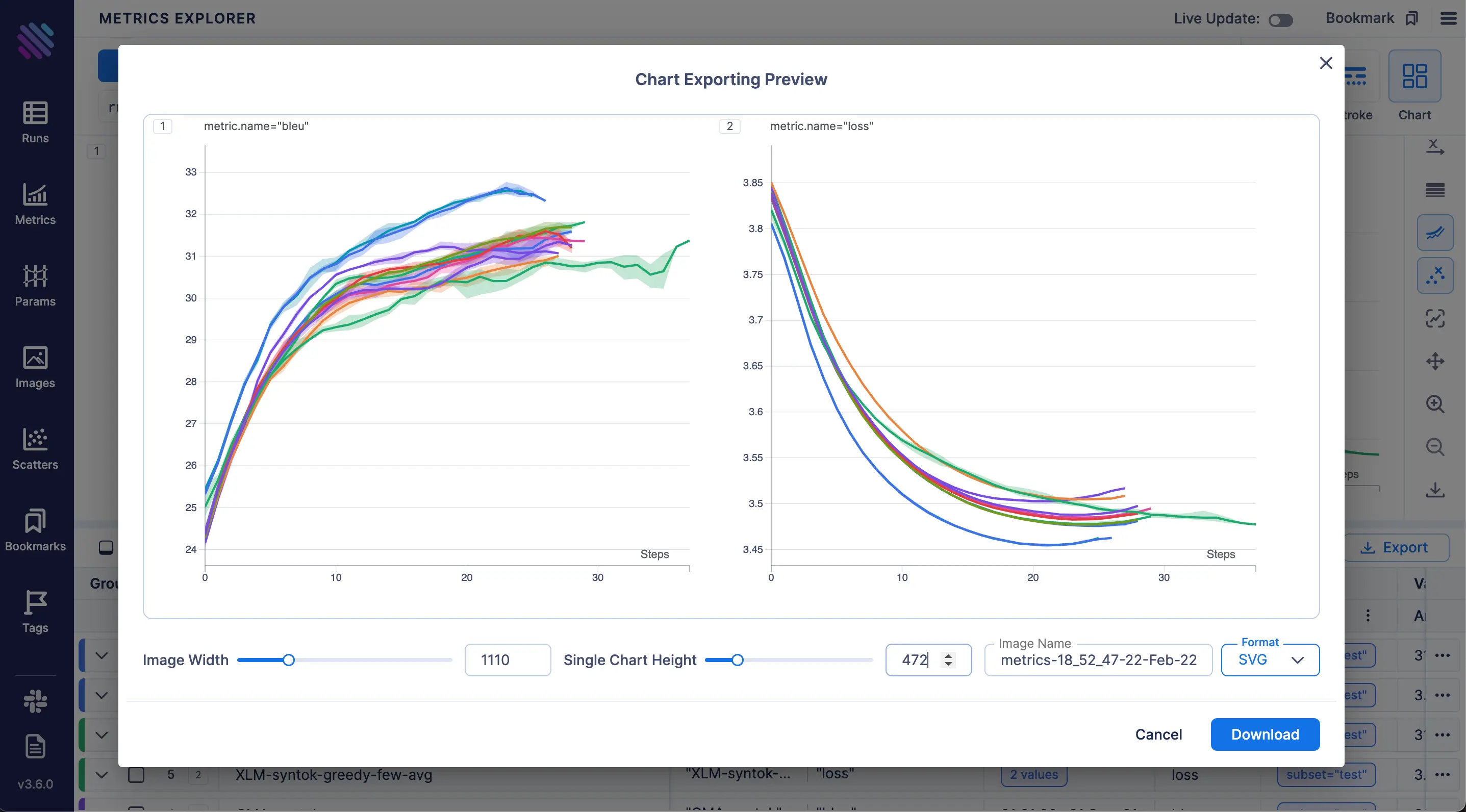

Export chart as image

Hey awesome Aim community, sorry for shipping this feature so late. We know it has been highly requested, and we kept failing to find the time for it.

Image export is finally here! You can export charts as JPEG, PNG and SVG, so you can include the awesome Aim visuals in your reports and research papers.

You can control the width, height and format of the downloaded image.

Adding and editing legends will come in future versions.

We’d still love to hear your feedback on how you’d like us to tune this feature.

MLflow to Aim logs converter

This one has also been requested across the board by many users. Now you can convert your MLflow logs to Aim and compare all your runs, regardless of where they were tracked.

Here is how it works:

```shell
$ aim init
$ aim convert mlflow --tracking_uri 'file:///Users/aim_user/mlruns'
```

Besides metrics, you can also convert images, texts and audio. More on that here.
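The `--tracking_uri` value above is a `file://` URI built from the absolute path of a local `mlruns` directory. If yours lives somewhere else, a small helper can build the command for you. The one below is a hypothetical convenience function of our own. `mlflow_convert_argv` is not part of Aim. It only handles local POSIX paths and uses no flags beyond the ones shown above.

```python
import posixpath
import shlex
from typing import List
from urllib.parse import quote


def mlflow_convert_argv(mlruns_dir: str) -> List[str]:
    """Hypothetical helper: build argv for `aim convert mlflow` from a local mlruns dir.

    Assumes an absolute POSIX path; only the --tracking_uri flag shown in this post is used.
    """
    if not posixpath.isabs(mlruns_dir):
        # A file:// URI needs an absolute path, so reject relative paths early.
        raise ValueError(f"expected an absolute path, got {mlruns_dir!r}")
    # Percent-encode unsafe characters (e.g. spaces) while keeping the '/' separators.
    uri = "file://" + quote(mlruns_dir)
    return ["aim", "convert", "mlflow", "--tracking_uri", uri]


argv = mlflow_convert_argv("/Users/aim_user/mlruns")
print(shlex.join(argv))  # aim convert mlflow --tracking_uri file:///Users/aim_user/mlruns
```

You could pass the resulting list to `subprocess.run(argv, check=True)` from the directory where you ran `aim init`.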

PyTorch Ignite integration

For all Aim users working with PyTorch Ignite, there is now a native Ignite callback, so you can track your basic metrics automatically!

Here is a code snippet showing how to use the integration:

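```python
from ignite.engine import Events
from ignite.handlers import global_step_from_engine

# import the Aim logger designed for PyTorch Ignite
from aim.pytorch_ignite import AimLogger

# track experiment data with Aim
aim_logger = AimLogger(
    experiment='aim_on_pt_ignite',
    train_metric_prefix='train_',
    val_metric_prefix='val_',
    test_metric_prefix='test_',
)

# log train_evaluator's "nll" and "accuracy" metrics after every evaluation epoch,
# using the trainer's epoch count as the step;
# `trainer` and `train_evaluator` are your existing Ignite engines (not shown here)
aim_logger.attach_output_handler(
    train_evaluator,
    event_name=Events.EPOCH_COMPLETED,
    tag="train",
    metric_names=["nll", "accuracy"],
    global_step_transform=global_step_from_engine(trainer),
)
```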
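Why `global_step_transform=global_step_from_engine(trainer)`? In Ignite, an evaluator is typically re-run from scratch after each training epoch, so its own epoch counter reads 1 every time. Borrowing the trainer's counter gives each logged point a distinct step. The toy below illustrates that idea in plain Python. `ToyState`, `toy_global_step_from_engine` and `log_epoch` are hypothetical stand-ins that are much simpler than Ignite's real engines and handlers. For example, Ignite's real step function also receives the event name.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Point = Tuple[str, float, int]  # (metric name, value, step)


@dataclass
class ToyState:
    """Hypothetical stand-in for an Ignite engine's state: only the epoch counter."""
    epoch: int = 0


def toy_global_step_from_engine(source: ToyState) -> Callable[[ToyState], int]:
    """Return a step function that ignores the engine it is given and reads `source` instead."""
    return lambda _engine: source.epoch


def log_epoch(engine: ToyState, metrics: Dict[str, float], tag: str,
              step_fn: Callable[[ToyState], int], sink: List[Point]) -> None:
    """Record every metric under a hypothetical '<tag>/<name>' key at step_fn(engine)."""
    step = step_fn(engine)
    for name, value in metrics.items():
        sink.append((f"{tag}/{name}", value, step))


trainer, train_evaluator = ToyState(), ToyState()
with_transform: List[Point] = []
without_transform: List[Point] = []

for epoch in range(1, 4):
    trainer.epoch = epoch       # the trainer advances one epoch...
    train_evaluator.epoch = 1   # ...and the evaluator does a fresh one-epoch run
    metrics = {"nll": 1.0 / epoch, "accuracy": 0.5 + 0.1 * epoch}  # made-up values
    log_epoch(train_evaluator, metrics, "train", toy_global_step_from_engine(trainer), with_transform)
    log_epoch(train_evaluator, metrics, "train", lambda engine: engine.epoch, without_transform)

print(sorted({step for _, _, step in with_transform}))     # [1, 2, 3]
print(sorted({step for _, _, step in without_transform}))  # [1]
```

Without the transform, all three evaluations share step 1, so there is no curve to look at.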

Activeloop Hub integration

We’re excited to share that, thanks to an integration with Activeloop, starting with Aim 3.6 you can add your Hub dataset info as a run param and use it to search and compare your runs across all Explorers.

Here is a code snippet to demonstrate the usage:

```python
import hub

from aim.sdk.objects.plugins.hub_dataset import HubDataset
from aim.sdk import Run

# create the Hub dataset object
ds = hub.dataset('hub://activeloop/cifar100-test')

# log the dataset metadata as a run param (system resource tracking disabled)
run = Run(system_tracking_interval=None)
run['hub_ds'] = HubDataset(ds)
```

Among other things, the following information becomes available:

```
run.hub_ds.version
run.hub_ds.num_samples
run.hub_ds.tensors.name
run.hub_ds.tensors.compression_type
```

Hub is an awesome tool to build, manage, query & visualize datasets for deep learning, as well as to stream data in real time to PyTorch/TensorFlow & version-control it. Check out the Hub docs.
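Because the dataset info is stored as a run param, it can be used in the Python-like search expressions of Aim’s Explorers, with a query such as `run.hub_ds.num_samples >= 1000`. The sketch below mimics that kind of filtering in plain Python so you can see what such expressions would select. The runs, hashes and values are made up, and `search` is a hypothetical helper, not Aim’s query engine.

```python
from types import SimpleNamespace
from typing import Callable, List

# Made-up runs: `hub_ds` mirrors two of the dataset fields listed above.
runs = [
    SimpleNamespace(hash="a1", hub_ds=SimpleNamespace(version="2.0", num_samples=10_000)),
    SimpleNamespace(hash="b2", hub_ds=SimpleNamespace(version="2.0", num_samples=500)),
    SimpleNamespace(hash="c3", hub_ds=SimpleNamespace(version="1.0", num_samples=10_000)),
]


def search(candidates: List[SimpleNamespace],
           predicate: Callable[[SimpleNamespace], bool]) -> List[str]:
    """Hypothetical helper: return the hashes of runs for which predicate(run) is true."""
    return [run.hash for run in candidates if predicate(run)]


print(search(runs, lambda run: run.hub_ds.num_samples >= 1000))  # ['a1', 'c3']
print(search(runs, lambda run: run.hub_ds.version == "2.0" and run.hub_ds.num_samples < 1000))  # ['b2']
```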

Wildcard support for the `aim runs` command

In Aim 3.5 we introduced the `aim runs mv` command, which lets you move individual runs between repositories.

Now we have also added wildcard support, so you can move all the runs of a repository at once.
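A wildcard has to be expanded into a concrete list of runs before anything is moved. The sketch below shows one way that expansion could work. It assumes shell-style glob matching over run hashes using Python’s `fnmatch`. That assumption is made for illustration only and does not describe Aim’s internals. `select_runs` and the hashes are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List


def select_runs(run_hashes: Iterable[str], patterns: Iterable[str]) -> List[str]:
    """Hypothetical helper: keep hashes matching any shell-style pattern.

    Results follow the input order, and each input hash is kept at most once,
    even if several patterns match it.
    """
    pattern_list = list(patterns)  # allow one-shot iterables to be reused per hash
    # fnmatchcase is case-sensitive on every OS, unlike fnmatch.fnmatch
    return [h for h in run_hashes if any(fnmatchcase(h, p) for p in pattern_list)]


# Made-up run hashes standing in for the runs of a source repo.
hashes = ["3f9a1c", "3f02bb", "a7c410", "e51d9e"]

print(select_runs(hashes, ["*"]))    # ['3f9a1c', '3f02bb', 'a7c410', 'e51d9e']
print(select_runs(hashes, ["3f*"]))  # ['3f9a1c', '3f02bb']
```

Under this assumption, `*` matches every run in the source repo, which is exactly the "move everything" case.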

Here is the CLI interface:

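```shell
$ aim runs --repo <source_repo/.aim> mv '*' --destination <dest_repo/.aim>
```

Quote the `*` so that your shell passes it to Aim as-is instead of possibly expanding it into the file names of your current directory.

Learn More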

Aim is on a mission to democratize AI dev tools.

We have been incredibly lucky to get help and contributions from the amazing Aim community. It’s humbling and inspiring.

Try out Aim, join the Aim community, share your feedback, and open issues for new features and bugs.

And don’t forget to leave Aim a star on GitHub for support 🙌.